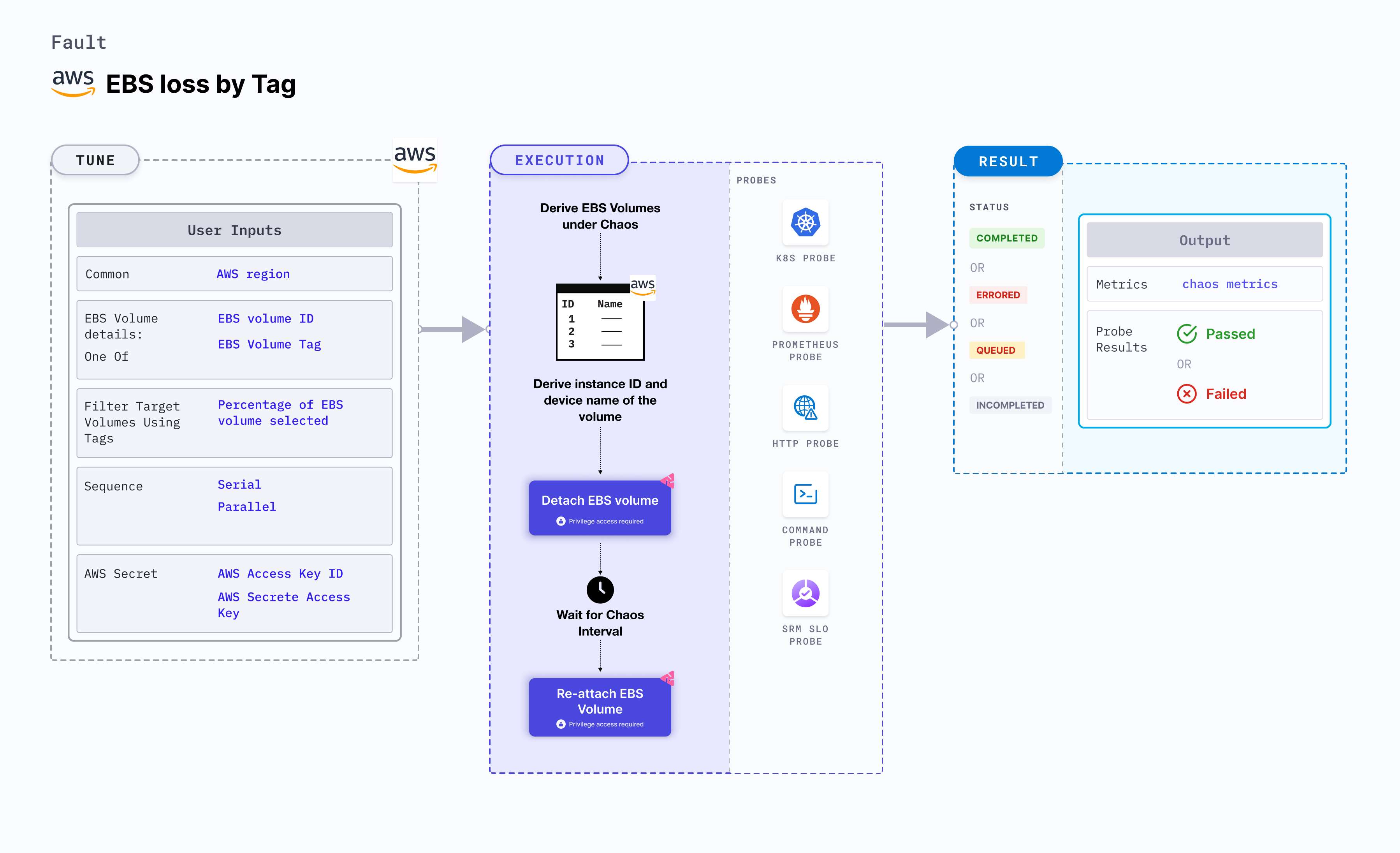

EBS loss by tag

EBS (Elastic Block Store) loss by tag disrupts the state of EBS volumes by detaching the volumes that match a given tag from the node (or EC2 instance) for a certain duration. In the case of EBS persistent volumes, the volumes can self-attach, in which case the reattachment step is skipped.

Use cases

EBS loss by tag tests the deployment sanity (replica availability and uninterrupted service) and recovery workflows of the application pod.

Prerequisites

- Kubernetes >= 1.17

- The EBS volume is attached to the instance.

- Appropriate AWS access to attach or detach an EBS volume for the instance.

- The Kubernetes secret should have the AWS access configuration (key) in the CHAOS_NAMESPACE. A sample secret file looks like:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: cloud-secret
type: Opaque
stringData:
  cloud_config.yml: |-
    # Add the cloud AWS credentials respectively
    [default]
    aws_access_key_id = XXXXXXXXXXXXXXXXXXX
    aws_secret_access_key = XXXXXXXXXXXXXXX
```

HCE recommends that you use the same secret name, that is, cloud-secret. Otherwise, you will need to update the AWS_SHARED_CREDENTIALS_FILE environment variable in the fault template, and you won't be able to use the default health check probes.

Below is an example AWS policy to execute the fault.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ec2:AttachVolume",
        "ec2:DetachVolume"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": "ec2:DescribeVolumes",
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "ec2:DescribeInstanceStatus",
        "ec2:DescribeInstances"
      ],
      "Resource": "*"
    }
  ]
}
```

- Go to AWS named profile for chaos to use a different profile for AWS faults, and go to superset permission or policy for a single policy that can execute all AWS faults.

- Go to common tunables and AWS-specific tunables to tune the tunables shared by all faults and those specific to AWS faults, respectively.
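If you keep the AWS credentials in a secret other than cloud-secret, the fault needs to be told where the credentials file is. The snippet below is a minimal sketch that sets AWS_SHARED_CREDENTIALS_FILE alongside the mandatory tunables. It assumes that your secret is already mounted into the fault pod and that the hypothetical path /tmp/aws_credentials.yml points at its credentials file; adjust the path to match your setup. As noted above, the default health check probes rely on cloud-secret and may not work with a different secret name.

```yaml
# Sketch: point the fault at a non-default credentials file.
# ASSUMPTION: /tmp/aws_credentials.yml is a hypothetical path where
# your own secret's credentials file is mounted in the fault pod.
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  chaosServiceAccount: litmus-admin
  experiments:
  - name: ebs-loss-by-tag
    spec:
      components:
        env:
        # path to the AWS credentials file (default: /tmp/cloud_config.yml)
        - name: AWS_SHARED_CREDENTIALS_FILE
          value: '/tmp/aws_credentials.yml'
        # tag of the EBS volume
        - name: EBS_VOLUME_TAG
          value: 'key:value'
        # region for the EBS volume
        - name: REGION
          value: 'us-east-1'
```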

Mandatory tunables

| Tunable | Description | Notes |
|---|---|---|
| EBS_VOLUME_TAG | Common tag for target volumes. It is in the format key:value (for example, 'team:devops'). For more information, go to target single volume. | |
| REGION | Region name for the target volumes | For example, us-east-1. |

Optional tunables

| Tunable | Description | Notes |
|---|---|---|
| VOLUME_AFFECTED_PERC | Percentage of total EBS volumes to target | Default: 0 (corresponds to 1 volume). Provide a numeric value only. For more information, go to target percentage of volumes. |
| AWS_SHARED_CREDENTIALS_FILE | Path to the AWS secret credentials | Default: /tmp/cloud_config.yml. |
| TOTAL_CHAOS_DURATION | Time duration for chaos insertion (sec) | Default: 30 s. For more information, go to duration of the chaos. |
| CHAOS_INTERVAL | Time duration between the detachment and reattachment of the volumes (sec) | Default: 30 s. For more information, go to chaos interval. |
| SEQUENCE | Sequence of chaos execution for multiple volumes | Default: parallel. Supports serial and parallel. For more information, go to sequence of chaos execution. |
| RAMP_TIME | Period to wait before and after chaos injection (sec) | For example, 30 s. For more information, go to ramp time. |
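The optional tunables can be combined in a single ChaosEngine. The following snippet is an illustrative sketch, not a recommended configuration: it targets 50% of the volumes tagged team:devops, runs the chaos serially across the selected volumes, and sets a 30-second chaos interval and ramp time. The tag and the numeric values are placeholders; replace them with values that match your environment.

```yaml
# Sketch: serial chaos on a percentage of tagged volumes, with ramp time.
# The tag and all numeric values below are placeholders.
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  chaosServiceAccount: litmus-admin
  experiments:
  - name: ebs-loss-by-tag
    spec:
      components:
        env:
        # target 50% of the volumes that carry the tag
        - name: VOLUME_AFFECTED_PERC
          value: '50'
        # inject chaos into the selected volumes one after another
        - name: SEQUENCE
          value: 'serial'
        # seconds between the detach and attach steps
        - name: CHAOS_INTERVAL
          value: '30'
        # seconds to wait before and after chaos injection
        - name: RAMP_TIME
          value: '30'
        # total chaos duration in seconds
        - name: TOTAL_CHAOS_DURATION
          value: '60'
        # tag of the EBS volume
        - name: EBS_VOLUME_TAG
          value: 'team:devops'
        # region for the EBS volume
        - name: REGION
          value: 'us-east-1'
```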

Target single volume

A random EBS volume that matches the given EBS_VOLUME_TAG tag in the given REGION region is detached from the node.

The following YAML snippet illustrates the use of this environment variable:

```yaml
# contains the tags for the EBS volumes
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  chaosServiceAccount: litmus-admin
  experiments:
  - name: ebs-loss-by-tag
    spec:
      components:
        env:
        # tag of the EBS volume
        - name: EBS_VOLUME_TAG
          value: 'key:value'
        # region for the EBS volume
        - name: REGION
          value: 'us-east-1'
        - name: TOTAL_CHAOS_DURATION
          value: '60'
```

Target percent of volumes

The VOLUME_AFFECTED_PERC environment variable specifies the percentage of EBS volumes, among those matching the EBS_VOLUME_TAG tag in the given REGION region, that are detached from the node.

The following YAML snippet illustrates the use of this environment variable:

```yaml
# target percentage of the EBS volumes with the provided tag
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine-nginx
spec:
  engineState: "active"
  chaosServiceAccount: litmus-admin
  experiments:
  - name: ebs-loss-by-tag
    spec:
      components:
        env:
        # percentage of EBS volumes filter by tag
        - name: VOLUME_AFFECTED_PERC
          value: '100'
        # tag of the EBS volume
        - name: EBS_VOLUME_TAG
          value: 'key:value'
        # region for the EBS volume
        - name: REGION
          value: 'us-east-1'
        - name: TOTAL_CHAOS_DURATION
          value: '60'
```